This tutorial covers creating your own datamosh-like shader, and goes over two very useful techniques for Unity shader effects: motion vectors and pixel recycling. Datamoshing refers to the artifacts produced by modern digital video compression, where small motion vectors displace blocks of pixels from an earlier frame instead of a fresh image being stored for every single frame. It’s quite an interesting technique, and it’s fun to try reproducing its artifacts.

QUICK NOTES:

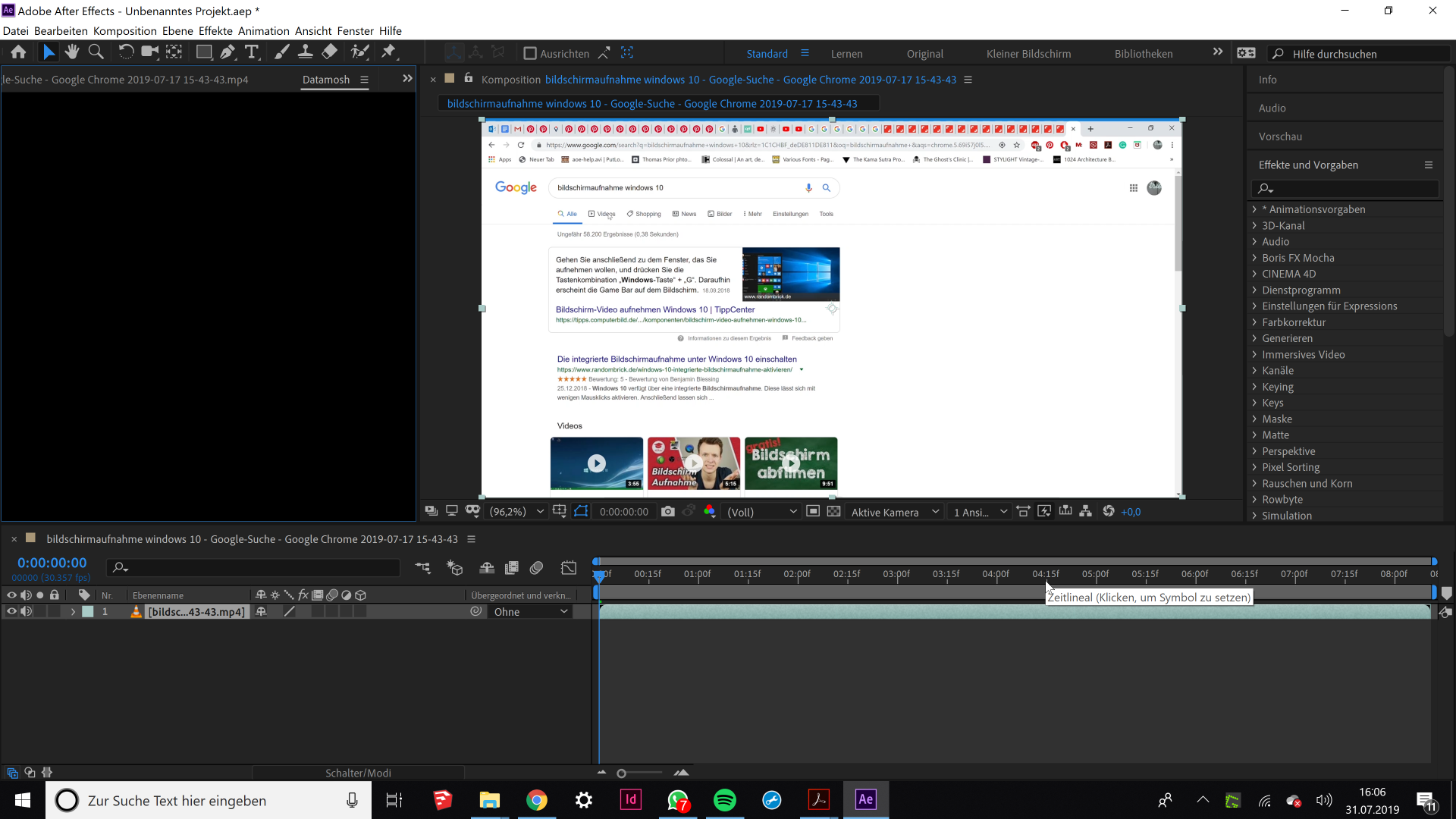

Unity’s internal motion vectors only work on some platforms, and require the target platform to support RG16-format (or RGHalf) render textures. Some platforms, like WebGL, do not support this at the time of writing, though it may be possible to write your own motion vector system.

Pixel recycling (or feedback, or continuous computation, etc…) is incredibly useful for producing interesting/dynamic feedback loop effects, as well as extremely valuable for simulating physics and other complex computations with accumulative data (as opposed to data that’s disposed of at the end of each frame).

A number of projects of mine make extensive use of this technique, and because it relies only on preventing Unity from clearing data from the pixel buffer, it’s pretty easy to get working on all platforms.

There are a number of ways you can set this up in Unity to read back pixels from the previous frame (another method is explored here in a tutorial by Alan Zucconi), but here we’ll be exploring a simple method that uses Unity’s GrabPass to do this entirely in the shader.

To start, I’ve created a scene with geometry and a first person character controller. Feel free to use whatever you want as long as you have some way of moving your camera when playing to check the motion vectors, and geometry/meshes in your scene. Also make sure that whatever materials you have in your scene either use shaders that use Unity’s standard surface shader system or write to depth/motion vectors.

First, let’s get the core of the script out of the way.

The Start function includes a line that tells Unity to generate a motion vector texture for the current camera, and OnRenderImage includes a line that renders the frame with an image effect material.

To get started with the shader, let’s try to visualize the motion vectors so we can get a better understanding of what kind of data it will provide us with. The fragment shader is as follows:

Add this shader to a material, then add the script to your camera and the material to the DMmat variable on the script. Now when you move your camera in scene space (or if objects in view have movement relative to the camera), you should see motion vectors visualized as color, but only during movement.

Motion vectors give you a two-channel texture that stores, for each pixel, the difference between where an object renders in camera space this frame and where it rendered in the previous frame.

Motion Vector value chart:

(values in relation to previous frame)

G- represents Y-

R- represents X- | R+ represents X+

G+ represents Y+

R@0.0 & G@0.0 represents no motion
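To make the chart concrete, here is a small CPU-side sketch in Python (not shader code, and not the tutorial’s own listing). It assumes the motion vector is simply the current UV position minus the previous one, and that writing it straight to the screen clamps each channel to the 0..1 range. The names `motion_vector`, `to_rg` and `gain` are made up for this illustration.

```python
def motion_vector(prev_uv, curr_uv):
    """Per-pixel motion in UV units: where the surface is now minus where it was."""
    return (curr_uv[0] - prev_uv[0], curr_uv[1] - prev_uv[1])


def to_rg(mot, gain=10.0):
    """Turn a motion vector into red/green intensities for display.

    Assumption: the display clamps to 0..1, so negative motion shows as black
    and only positive X / positive Y motion is visible as red / green.
    `gain` is a hypothetical brightness multiplier, since real values are tiny.
    """
    r = min(max(mot[0] * gain, 0.0), 1.0)
    g = min(max(mot[1] * gain, 0.0), 1.0)
    return (r, g)


# A surface that moved right and slightly down in UV space since last frame.
mot = motion_vector((0.50, 0.50), (0.52, 0.49))
red, green = to_rg(mot)
```

With a static camera and static objects the previous and current positions match, so both channels are zero, which is why the colours only appear during movement.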

Now that we have access to motion vectors for each onscreen pixel, let’s use it to create UV displacement.

This is actually the basis for the datamoshing effect. All it’s doing is displacing pixels from the previous frame based on motion vectors, similar to how video compression codecs skip storing a sample for every pixel on every frame and instead displace previous pixels using computed motion vector blocks, simulating motion at much smaller file sizes. The only thing that’s missing is recycling pixels from the previous frame.
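The displacement step can be sketched on the CPU in Python. This is an illustration under stated assumptions rather than the shader itself: images are plain lists of rows, sampling is nearest-neighbour with clamped UVs, and each output pixel looks up the source at its own UV minus its motion vector. The helper names `sample` and `displace` are hypothetical.

```python
def sample(image, uv):
    """Nearest-neighbour lookup with UVs clamped to the image edges."""
    h, w = len(image), len(image[0])
    x = min(max(int(uv[0] * w), 0), w - 1)
    y = min(max(int(uv[1] * h), 0), h - 1)
    return image[y][x]


def displace(image, motion):
    """Move pixels along their motion vectors.

    For every output pixel we read the source at (uv - motion), i.e. the place
    the content is assumed to have come from.
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            u, v = (x + 0.5) / w, (y + 0.5) / h  # pixel centre in UV space
            mx, my = motion[y][x]
            row.append(sample(image, (u - mx, v - my)))
        out.append(row)
    return out


# A one-row "image" where everything moves right by a quarter of the width.
shifted = displace([[10, 20, 30, 40]], [[(0.25, 0.0)] * 4])
```

In this example the row `[10, 20, 30, 40]` comes out as `[10, 10, 20, 30]`: the content slides one pixel to the right and the edge pixel is repeated because of the clamping.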

Before we start, let’s go back to the script and add control for a global shader variable so that we can enable and disable using recycled pixels at any time.

Now back in the shader, we need to add a GrabPass just before the main pass. This will grab all onscreen pixels before rendering the effect, and is usually useful for displacement effects and for materials on objects that need to read and modify the pixels the object obscures. Here, we’ll be repurposing it for recycling modified camera pixels from the previous frame.

Just before the main pass, add this:

This will write the grabpass texture to _PR (pixel recycling). Now, in the fragment shader:

Now you have your basic datamosh effect! The shader will start recycling the same pixels from the previous frame once you hold down the left mouse button, displacing them further each frame by the motion vectors, and will clear by rendering the _MainTex texture when you let go.
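The recycling loop described above can be modelled on the CPU with a short Python sketch. It is only an approximation of the idea: `previous_output` stands in for the grabbed `_PR` texture, `fresh` for `_MainTex`, and the boolean `recycle` for the script-controlled toggle. The function names and the list-of-rows image format are assumptions made for this example.

```python
def sample(image, uv):
    """Nearest-neighbour lookup with UVs clamped to the image edges."""
    h, w = len(image), len(image[0])
    x = min(max(int(uv[0] * w), 0), w - 1)
    y = min(max(int(uv[1] * h), 0), h - 1)
    return image[y][x]


def datamosh_frame(fresh, previous_output, motion, recycle):
    """Produce one output frame.

    When `recycle` is off (or there is no previous output yet) the fresh frame
    is shown. When it is on, the previous OUTPUT is displaced by the motion
    vectors, so displacement accumulates from frame to frame.
    """
    if not recycle or previous_output is None:
        return [row[:] for row in fresh]
    h, w = len(fresh), len(fresh[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            u, v = (x + 0.5) / w, (y + 0.5) / h
            mx, my = motion[y][x]
            row.append(sample(previous_output, (u - mx, v - my)))
        out.append(row)
    return out


motion = [[(0.25, 0.0)] * 4]                                  # constant rightward motion
out0 = datamosh_frame([[1, 2, 3, 4]], None, motion, False)    # fresh frame
out1 = datamosh_frame([[9, 9, 9, 9]], out0, motion, True)     # recycled once
out2 = datamosh_frame([[9, 9, 9, 9]], out1, motion, True)     # recycled twice
out3 = datamosh_frame([[7, 7, 7, 7]], out2, motion, False)    # toggle released
```

The key design point is that each recycled frame reads from the previous output rather than from the new camera image, so the old pixels keep smearing further until the toggle is released and the fresh frame replaces them.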

For fun, we’ll add two more functions: Flooring the motion vector UV & using noise to determine what parts of the screen will update!

BLOCKING WITH UV POSITION ROUNDING

In video compression, you don’t have a motion vector for every single pixel, since that would be very expensive in size. Instead, motion vectors are assigned to small blocks of the image and move chunks of pixels according to the general motion. Over a long stretch of P frames this causes more noticeable artifacts, but in its intended use with regularly refreshing I frames, it produces results similar to the original file with a huge reduction in file size.

To simulate this, we’ll round the UV positions for reading the motion vector UV. Modify the first lines of the previous shader to redefine how we’ll sample for ‘mot’.
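As a rough illustration of the blocking idea, the Python sketch below snaps a UV coordinate to a coarse grid before it would be used to look up the motion vector. It uses flooring and a hypothetical `blocks` count per axis; the tutorial’s shader may round differently or derive the block size from the screen resolution.

```python
import math


def block_uv(uv, blocks):
    """Snap a UV coordinate to the corner of the block that contains it.

    Every pixel inside one block ends up with the same snapped UV, so they
    would all read the same motion vector and move together as a chunk.
    """
    return (math.floor(uv[0] * blocks) / blocks,
            math.floor(uv[1] * blocks) / blocks)


# Two different pixels that fall inside the same block of a 4x4 grid.
a = block_uv((0.30, 0.80), 4)
b = block_uv((0.49, 0.76), 4)
```

Both `a` and `b` come out as `(0.25, 0.75)`, which is what makes the displaced image move in visible squares instead of per pixel.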

PER-BLOCK NOISE

For basic noise in shaders, I like to use this function:

And implement it into the fragment shader as follows:
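For readers without the listing at hand, here is a hedged Python sketch of per-block noise. It uses the widely shared sine-based hash often seen in shader code, which may or may not be the function the tutorial uses, and it assumes a block refreshes with fresh pixels when its noise value exceeds a threshold. `hash_noise`, `block_refreshes`, `time` and `threshold` are names chosen for this example.

```python
import math


def hash_noise(u, v):
    """Cheap pseudo-random value between 0 and 1 derived from a 2D coordinate."""
    s = math.sin(u * 12.9898 + v * 78.233) * 43758.5453
    return s - math.floor(s)  # keep only the fractional part


def block_refreshes(uv, blocks, time, threshold=0.5):
    """Decide whether the block containing `uv` shows fresh pixels this frame.

    The UV is snapped to its block first, so the decision is shared by every
    pixel in that block. `time` shifts the noise so the pattern changes over
    time (an assumption of this sketch).
    """
    bu = math.floor(uv[0] * blocks) / blocks
    bv = math.floor(uv[1] * blocks) / blocks
    return hash_noise(bu + time, bv) > threshold


same_block_a = block_refreshes((0.30, 0.80), 4, time=1.5)
same_block_b = block_refreshes((0.49, 0.76), 4, time=1.5)
```

Because the noise is sampled at the block coordinate rather than the pixel coordinate, `same_block_a` and `same_block_b` always agree, so whole blocks update or keep smearing together.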

I’m going to add one more line to the shader that will cause the motion vector data itself to be lossy. After defining ‘mot’, add this line:
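One plausible way to make motion data lossy is to quantize it, which is sketched below in Python. This is a stand-in technique chosen for illustration and is not necessarily the line the tutorial adds; the `steps` parameter is hypothetical and controls how coarse the motion values become.

```python
import math


def quantize_motion(mot, steps):
    """Snap each motion component to the nearest multiple of 1/steps.

    Small motions collapse to zero and larger ones jump between coarse levels,
    which throws away precision in the same spirit as lossy compression.
    """
    qx = math.floor(mot[0] * steps + 0.5) / steps
    qy = math.floor(mot[1] * steps + 0.5) / steps
    return (qx, qy)


# A small diagonal motion: X survives as one coarse step, Y is lost entirely.
lossy = quantize_motion((0.013, -0.004), 100)
```

Here `lossy` is `(0.01, 0.0)`. Lower `steps` values discard more of the motion, so recycled pixels stick in place until the movement is large enough to cross a step.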

Now you’ll get some very interesting lossy moshy artifacts.

If you enjoyed this tutorial or found it insightful, please consider supporting my Patreon so I can continue to explore more techniques and effects and have the time to write these kinds of tutorials!

You’ll also gain access to exclusive patron-only tutorials and writeups, teasers to upcoming projects, and much more in the near future.

Most examples above use the Apollo Pavillion model by Rory Peace, used for demonstration purposes.

Datamoshing is a form of glitch art which occurs when ‘the I-frames or key-frames of a temporally compressed video are removed, causing frames from different video sequences to bleed together‘.

HOW TO DATAMOSH (See also: PART 2: ENTER THE P-FRAME and PART 3: THE DATAMOSH ACTUALLY HAPPENS)

The guy behind these videos seems to have popularised the technique with a Chairlift music video for Evident Utensil, but Sony would rather you didn’t see it.

(Shh, it’s on Metacafe.)

AviGlitch is a Ruby library that claims to be the easiest way to datamosh a video file. (In this case, ‘easy’ seems to assume you understand the first thing about Ruby.)